ALCF Computational Performance Workshop

Held virtually in May, the annual ALCF Computational Performance Workshop is designed to help attendees boost application performance on ALCF systems. With dedicated access to ALCF computing resources, participants in the three-day workshop worked directly with ALCF staff and invited experts to test, debug, and optimize their applications. One of the workshop’s primary goals is to help researchers demonstrate code scalability for INCITE, ALCC, and ADSP project proposals, which must convey both scientific merit and computational readiness.

ATPESC 2022

The annual Argonne Training Program on Extreme-Scale Computing (ATPESC) celebrated its 10th anniversary in 2022. The two-week event provides training in the key skills, approaches, and tools needed to design, implement, and execute computational science and engineering applications on high-end computing systems, including upcoming exascale supercomputers. Organized by ALCF staff and funded by the ECP, ATPESC has a core curriculum that covers computer architectures; programming methodologies; data-intensive computing and I/O; numerical algorithms and mathematical software; performance and debugging tools; software productivity; data analysis and visualization; and machine learning and data science. More than 70 graduate students, postdocs, and career professionals in computational science and engineering attended this year’s program, and ATPESC has now hosted more than 600 participants since it began in 2013. To further extend the program’s reach, ATPESC lecture videos are made publicly available on YouTube each year.

Aurora Hackathons

The Argonne-Intel Center of Excellence (COE) continued to host hands-on training events to help Aurora Early Science Program (ESP) teams develop, port, and profile their applications for the ALCF’s upcoming exascale system. Events included a dungeon session, involving multiple ESP projects, and four hackathons. The multi-day, hands-on sessions pair ESP teams with experts from the ALCF and Intel to advance code development efforts using the Aurora software development kit, early hardware, and other exascale programming tools.

Aurora Workshop

This invitation-only workshop focused on helping ESP and ECP researchers prepare applications and software technologies for Aurora. The workshop was geared toward developers and emphasized using the Intel software development kit to get applications running on testbed hardware. Teams were also given the opportunity to consult with ALCF staff and provide feedback. The workshop kicked off in June with initial sessions devoted to presentations and status updates on Aurora’s hardware and software. ALCF staff also held dedicated office hours on topics ranging from programming models to profiling tools.

Best Practices for HPC Software Developers

In 2022, the ALCF, OLCF, NERSC, and ECP continued their collaboration with the Interoperable Design of Extreme-Scale Application Software (IDEAS) project to deliver a webinar series, Best Practices for HPC Software Developers, that helps users of HPC systems carry out their software development more productively. Webinar topics included lab notebooks for computational mathematics, using Openscapes in environmental science to uncover data-driven solutions faster, managing academic software development, and why the ability to “import” a package is a critical enabling technology for software reuse.

DeepHyper Automated Machine Learning Workshop

In July, the ALCF hosted a hands-on training session on DeepHyper, a distributed automated machine learning (AutoML) software package for automating the design and development of deep neural networks for scientific and engineering applications. DeepHyper seeks to bring a scientifically rigorous, automated approach to neural network model development, reducing the manually intensive, ad hoc, trial-and-error effort the process usually requires. In this virtual workshop, attendees learned how to use DeepHyper’s capabilities to automate the design and development of neural networks.

GPU Hackathon

In July, the ALCF partnered with NVIDIA to host its GPU Hackathon for the second time. The virtual event is designed to help developers accelerate their codes on ALCF resources using a portable programming model, such as OpenMP, or an AI framework of their choice. This year’s multi-day hackathon was the first event to provide access to the ALCF’s Polaris system, an HPE Apollo Gen10+ machine equipped with NVIDIA A100 Tensor Core GPUs and AMD EPYC processors. A total of 11 teams participated, working on a wide range of topics including black hole imaging, fusion plasma dynamics, and the development of synthetic genes that will help predict the viral escape of SARS-CoV-2 genomes.

HDF5 Workshop

In August, the ALCF hosted a workshop focused on HDF5 and the parallel file system, the file system’s possible effects on HDF5 performance, and tools useful for performance investigations. The workshop was geared toward the ALCF’s Polaris system and drew on well-known codes and use cases from HPC science applications for its hands-on demonstrations. Participants were introduced to cutting-edge HDF5 features and system-specific considerations, and they received significant hands-on assistance from ALCF experts.

Monthly ALCF Webinars

The ALCF continued to host monthly webinars in two tracks: ALCF Developer Sessions and the Aurora Early Adopters Series. ALCF Developer Sessions are aimed at training researchers and increasing the dialogue between HPC users and the developers of leadership-class systems and software. Speakers in the series included developers from NVIDIA and Argonne, covering topics such as getting started on Polaris, profiling with NVIDIA Nsight, and an introduction to HDF5. The Aurora Early Adopters Series is designed to introduce researchers to programming models, exascale technologies, and other tools available for testing and development work. Topics included bringing oneAPI to Python, the CUDA-to-SYCL migration tool, and the Intel Extension for Scikit-learn. Both webinar series are open to the public, and videos of all talks are posted to the ALCF website and YouTube channel.

PythonFoam Workshop

In April, the ALCF hosted a hands-on training session on integrating OpenFOAM with Python. In the virtual workshop, attendees learned how to couple OpenFOAM, a general-purpose CFD solver, with Python to enable in situ data analysis and machine learning for computational physics.

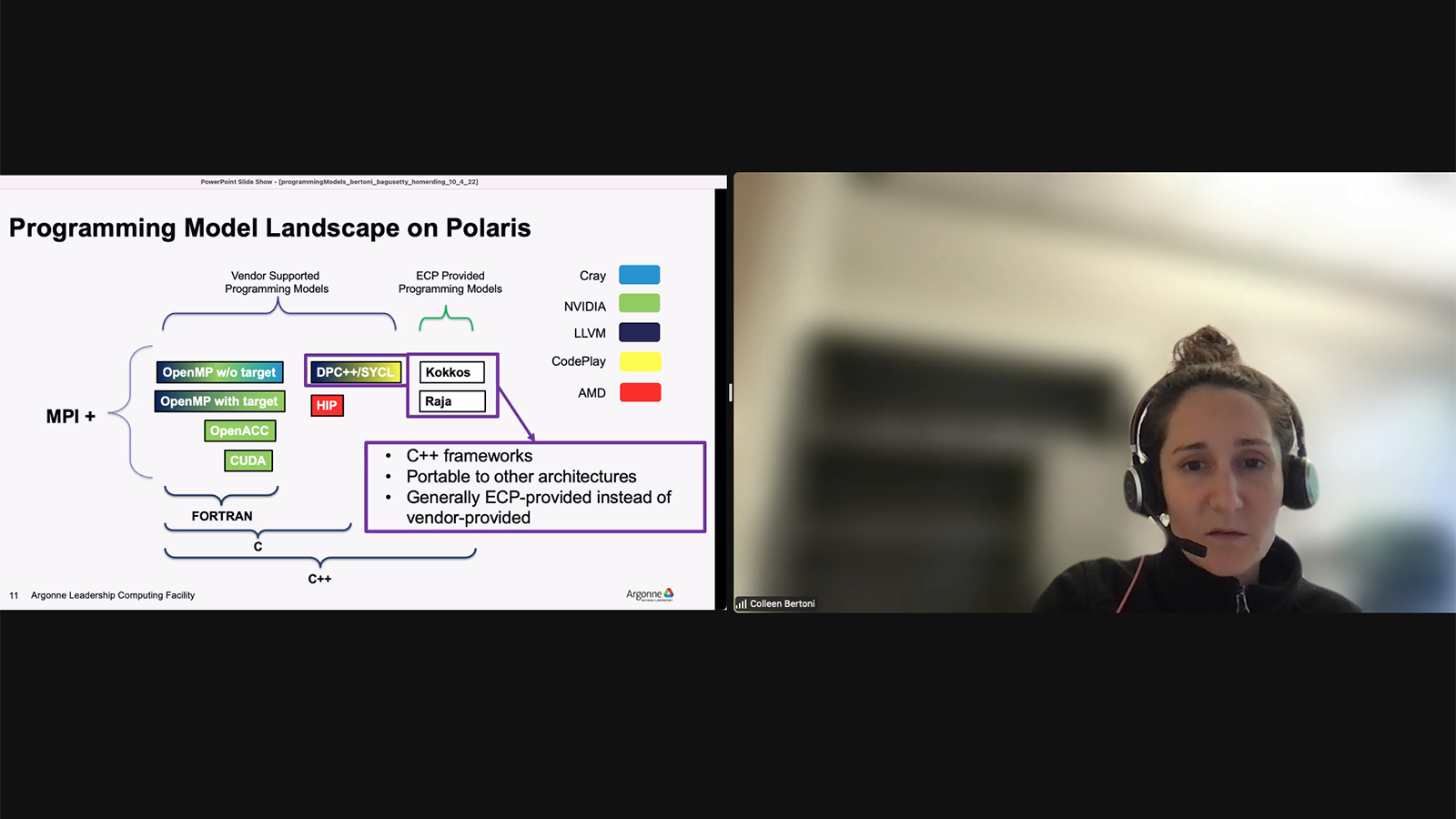

Simulation, Data, and Learning Workshop

In October, the ALCF hosted its annual Simulation, Data, and Learning (SDL) Workshop, a multi-day virtual event designed to help researchers improve the performance and productivity of simulation, data science, and machine learning applications on ALCF systems. Participants had the opportunity to learn about leading-edge AI methods and technologies while working directly with ALCF staff scientists during dedicated hands-on sessions. Over the course of the workshop, attendees learned how to use deep learning tools and programming models on Polaris, and they received an overview of the ALCF’s Aurora supercomputer.